UNESCO strongly believes in the power of digital technologies, including AI, to reshape education. Yet the UN agency is concerned about “equity, privacy, and the ethical use of technology.” Nearly 87% of schools across the world have integrated AI tools in K-12 education. As AI becomes a default feature in digital learning platforms, chatbots will play an ever larger role. The catch is that not all AI is built for education. Generic chatbots are not designed to comply with educational standards. They can compromise student privacy and online safety, and perpetuate misinformation. Widely reported cases of popular AI models giving users misleading guidance have brought the risk of unmonitored AI use, especially by young learners, to the fore. Schools and districts cannot take that risk. They seek AI learning assistants designed specifically for student use.

Why Generic AI Chatbots Don’t Work in Education

Generic chatbots aren’t safe, and here’s why:

Uncontrolled Training Data

These systems are trained on uncurated content from the public web. This means they can “learn” incorrect things and spread misinformation.

No Academic Integrity Guardrails

Unlike AI learning assistants designed for K-12 education, generic chatbots have no built-in academic rules. They give direct answers immediately, without pushing students to try harder or offering tools to bridge learning gaps. As a result, they end up hindering learning instead of driving it.

Weak Access Controls

Weak access controls create serious privacy risks for students and can violate student privacy laws. This exposes both the edtech solution and the learning institution to penalties.

One-Size-Fits-All Solutions

Unlike K-12-focused AI learning assistants, which work in sync with syllabi, generic chatbots are disconnected from the curriculum and lesson plans. For these tools, every query is a conversation starter, so they can easily distract students from learning.

What “Safe AI for Education” Actually Means

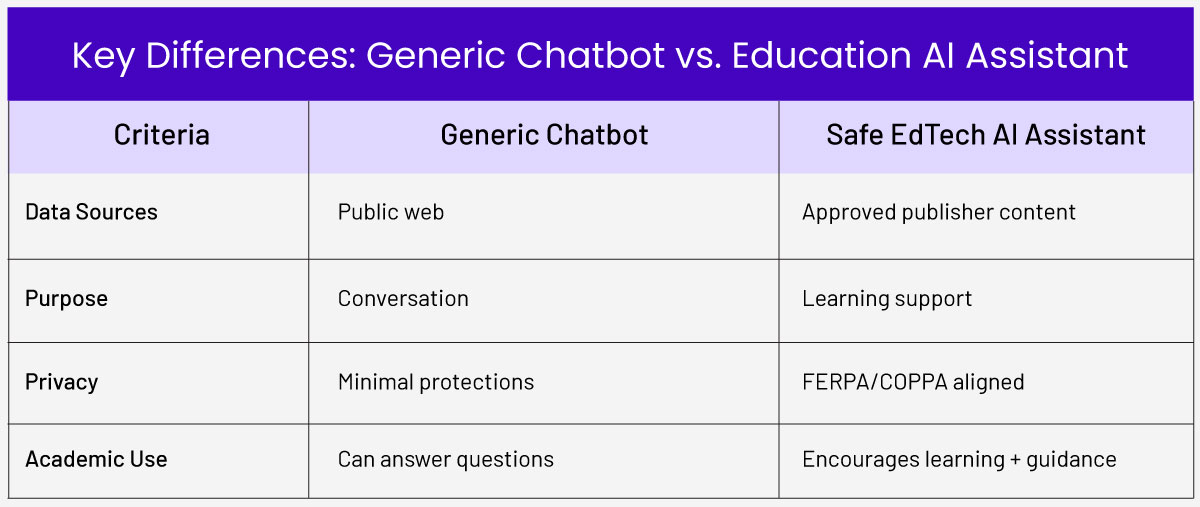

Safe AI is fundamentally different from a generic chatbot. Its purpose is learning support, not answering every query. Safe AI tools discourage off-topic conversations and are directly aligned with learning outcomes. By design, they push students toward deeper understanding. AI learning assistants such as MagicBox’s KEA are designed specifically for K-12 education.

Age-appropriate guardrails prevent access and exposure to inappropriate content. These tools filter harmful or toxic responses and watch for signs of distress in students’ messages, so that student safety is prioritized at all times.

Safe AI means strict data protection, which is achieved through compliance with privacy laws, such as COPPA, FERPA, and GDPR. This commitment to data safety builds trust with districts. Plus, compliance with WCAG standards ensures accessibility for every student.

Most importantly, safe AI learning assistants do not work in isolation. They offer clear escalation paths and give teachers complete visibility into how students use them. Advanced AI models also alert teachers when they identify concerning student behaviour, so educators can intervene where needed. This also reassures teachers of their value in the digital learning ecosystem and keeps them in their role as drivers of learning.
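To make the guardrail and escalation idea concrete, here is a minimal sketch of a message screen that blocks off-limits topics and alerts a teacher when a message suggests distress. Everything in it is an assumption for illustration: the term lists, the `TeacherInbox` class, and the `screen_message` function are hypothetical, and a production system would rely on vetted classifiers rather than keyword matching.

```python
from dataclasses import dataclass, field

# Hypothetical term lists for illustration only; a real deployment
# would use vetted, age-appropriate classifiers, not keyword matching.
BLOCKED_TERMS = {"gambling", "violence"}
DISTRESS_TERMS = {"hopeless", "hurt myself"}


@dataclass
class TeacherInbox:
    """Hypothetical stand-in for the teacher-facing alert channel."""
    alerts: list = field(default_factory=list)

    def notify(self, student_id: str, reason: str) -> None:
        self.alerts.append((student_id, reason))


def screen_message(student_id: str, message: str, inbox: TeacherInbox) -> str:
    """Return 'escalate', 'block', or 'allow' for a student message."""
    text = message.lower()
    # Distress is checked first so that a teacher is always alerted.
    if any(term in text for term in DISTRESS_TERMS):
        inbox.notify(student_id, "possible distress")
        return "escalate"
    if any(term in text for term in BLOCKED_TERMS):
        return "block"
    return "allow"
```

Checking for distress before checking for blocked topics is a deliberate choice in this sketch: a message that trips both lists should still reach a teacher.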

Delivering a Safe AI Experience with K-12 Publishing

The digital learning space is heavily regulated. K-12 publishers, therefore, need a controlled system with compliant APIs. This is the foundation of safe AI learning assistants.

Start with a private knowledge base, which lets you control the training dataset for the AI model. The model is configured to use only approved educational content and to pull only the information relevant to the curriculum. This keeps accuracy high and limits misinformation, which in turn builds academic credibility.
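One way to picture the private knowledge base is as a lookup that answers only from approved passages and declines everything else. The sketch below swaps in a simple keyword match in place of a real model or search index, and the `APPROVED_CONTENT` entries, `retrieve`, and `answer` are hypothetical names invented for this example.

```python
import re
from typing import Optional

# Hypothetical approved passages; in practice these would come from
# the publisher's own curriculum-aligned content.
APPROVED_CONTENT = [
    {
        "keywords": {"photosynthesis", "chlorophyll", "sunlight"},
        "passage": "Plants use sunlight, water, and carbon dioxide "
                   "to make glucose and oxygen.",
    },
    {
        "keywords": {"fraction", "fractions", "numerator", "denominator"},
        "passage": "A fraction represents equal parts of a whole.",
    },
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str) -> Optional[str]:
    """Return the best-matching approved passage, or None if none match."""
    tokens = tokenize(query)
    best = max(APPROVED_CONTENT, key=lambda e: len(e["keywords"] & tokens))
    if not best["keywords"] & tokens:
        return None
    return best["passage"]


def answer(query: str) -> str:
    """Answer only from approved content; decline anything else."""
    passage = retrieve(query)
    if passage is None:
        return "I can only help with topics from your course materials."
    return passage
```

For example, `answer("What is photosynthesis?")` returns the approved passage, while a question about last night's football match gets the polite refusal.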

Such models have a pedagogical logic layer that guides the AI bot’s actions. It makes the bot ask probing questions that build students’ critical thinking and reasoning skills. It also stops the AI from handing out direct answers, so the assistant remains a tool for improving learning outcomes rather than an answer machine.
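A pedagogical logic layer can be thought of as a small policy that sits in front of the model and decides what kind of help to give. The following is a rough sketch under assumed rules: the three modes, the attempt thresholds, and the `choose_mode` and `respond` functions are all hypothetical, not a description of any particular product.

```python
from enum import Enum


class Mode(Enum):
    PROBE = "probe"                    # ask what the student already knows
    HINT = "hint"                      # nudge toward the next step
    WORKED_EXAMPLE = "worked_example"  # model a similar problem


def choose_mode(attempts: int) -> Mode:
    """Pick a support mode from the number of attempts so far.

    The thresholds are assumptions chosen for illustration.
    """
    if attempts == 0:
        return Mode.PROBE
    if attempts < 3:
        return Mode.HINT
    return Mode.WORKED_EXAMPLE


def respond(question: str, attempts: int) -> str:
    """Build a response that never states the final answer directly."""
    mode = choose_mode(attempts)
    if mode is Mode.PROBE:
        return f"What do you already know that could help with: {question}"
    if mode is Mode.HINT:
        return f"Look at your last step again. What could you try next for: {question}"
    return f"Let's work through a similar problem together, then return to: {question}"
```

Note that even the most supportive mode here walks through a similar problem instead of revealing the answer, which keeps the student doing the final reasoning.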

Compliance is not optional for schools. Safe AI assistants must be compliant by design. They store student data separately from other data, in secured and tightly controlled repositories. All access is monitored via DRM systems to protect student privacy.
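As a simplified illustration of separated storage with monitored access, the sketch below keeps student records under pseudonymous keys, checks the requester's role, and logs every read attempt. It does not model a DRM system, and the `StudentRecordStore` class, the role names, and the hard-coded key are assumptions for this example only.

```python
import hashlib
import hmac
from datetime import datetime, timezone

# Hypothetical values: a real system would load the key from a secrets
# manager and take roles from the institution's identity provider.
SECRET_KEY = b"replace-with-a-managed-secret"
ALLOWED_ROLES = {"teacher", "district_admin"}


def pseudonymize(student_id: str) -> str:
    """Derive a stable pseudonym so raw IDs never act as storage keys."""
    digest = hmac.new(SECRET_KEY, student_id.encode(), hashlib.sha256)
    return digest.hexdigest()[:16]


class StudentRecordStore:
    """In-memory stand-in for a dedicated student-data repository."""

    def __init__(self) -> None:
        self._records = {}
        self.audit_log = []

    def save(self, student_id: str, record: dict) -> None:
        self._records[pseudonymize(student_id)] = record

    def read(self, student_id: str, requester_role: str) -> dict:
        allowed = requester_role in ALLOWED_ROLES
        # Every attempt is logged, including the ones that are denied.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "role": requester_role,
            "student": pseudonymize(student_id),
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"role '{requester_role}' may not read records")
        return self._records[pseudonymize(student_id)]
```

Logging denied attempts alongside successful ones is what gives a district an audit trail to review when it needs to demonstrate compliance.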

Safe AI learning assistants are also not standalone tools. They are deeply integrated within teaching and learning workflows, connecting with specific assignments, each student’s learning stage, and similar context to offer relevant, timely guidance. This is not possible with generic AI tools, which lack the necessary contextual links to the LMS.

Safe AI Is the Next Differentiator

Building a safe AI assistant shows you care about students. It proves your product is responsible, which in turn drives adoption.

Education needs reliable, unbiased, and safe AI. Pedagogically sound AI learning assistants support the teacher responsibly. Publishers who want to achieve long-term market leadership must see AI as a safety commitment. Speak to the MagicBox team to discover how this commitment can be fulfilled consistently.